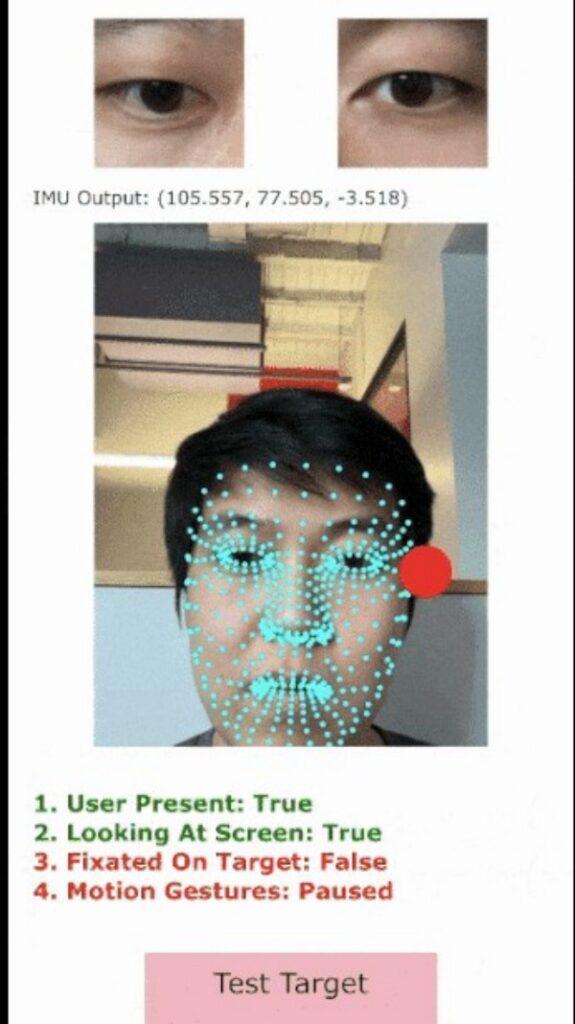

This repository (linked below) houses the code for the paper "EyeMU Interactions: Gaze + IMU Gestures on Mobile Devices". The authors explored combining gaze tracking with motion gestures on the phone to enable enhanced single-handed interaction with mobile devices. You can find links to their open-access paper, as well as their demo video, for further details.

I tested this with a desktop webcam, and even that works for gaze tracking (to some extent).

The biggest problem, for me anyway, is remembering which gestures to use, so an app to help you practice a bit (like a game) could really help build that muscle memory. Eye tracking does seem quite natural, and I like the way they use it to eliminate false positives with gestures.
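To make the false-positive idea a bit more concrete, here is a rough Python sketch of how I picture gaze gating: a motion gesture only counts if you happen to be looking at something on screen when it fires. This is not the EyeMU code. The names `Target` and `gated_gesture`, the rectangle hit test, and the gesture labels are all my own assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Target:
    """A hypothetical on-screen item, described by its bounding box in pixels."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, gx: float, gy: float) -> bool:
        # True when the gaze point falls inside this item's rectangle.
        return (self.x <= gx <= self.x + self.width
                and self.y <= gy <= self.y + self.height)


def gated_gesture(
    gesture: Optional[str],
    gaze: Tuple[float, float],
    targets: List[Target],
) -> Optional[Tuple[str, str]]:
    """Return (gesture, target name) only if the gaze rests on a target.

    A gesture detected while the user looks elsewhere is dropped,
    which is the assumed way of filtering out accidental motions.
    """
    if gesture is None:
        return None
    gx, gy = gaze
    for target in targets:
        if target.contains(gx, gy):
            return (gesture, target.name)
    return None


targets = [Target("email", 0, 0, 100, 50)]
print(gated_gesture("flick_left", (10, 10), targets))    # gaze on the item
print(gated_gesture("flick_left", (500, 500), targets))  # gaze elsewhere
```

The point of the design is that neither signal has to be perfect on its own: a shaky phone with no gaze on a target does nothing, and simply looking at an item without a gesture does nothing either.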

The link below has links to demo sites you can try out from your mobile device, with no software to install (hint: go for the Playground link to avoid very lengthy calibrations).

See https://github.com/FIGLAB/EyeMU

#technology #opensource #assistivetechnology #gestures