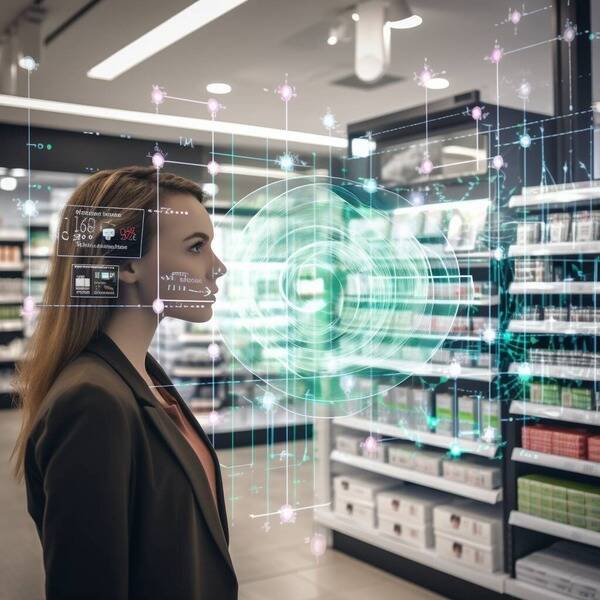

It’s one thing just having CCTV cameras, but quite another when your face, the regularity of your visits, etc. become part of a massive database. Too often we’ve seen such databases also being sold for profit. At some point, a line gets crossed as to what is acceptable to most people.

According to the US FTC, the retailer “failed to implement reasonable procedures and prevent harm to consumers in its use of facial recognition technology in hundreds of stores.”

The company’s facial recognition database was built by two companies contracted by Rite Aid and included tens of thousands of individuals. Many of the images, the FTC disclosed, were low quality and came from store security cameras, employee phones, and even news stories.

I get that they’re trying to prevent crime, but firstly, it was the number of false positives that actually caused the problem here with the FTC, and secondly, you have to worry about those two contracted companies and what they’re up to (previous privacy leaks of data have often involved third-party companies).

Although this involved a retail company, unfortunately many governments also collect masses of citizen data including biometrics, and their security controls are typically the most lax of all.

It is the era we live in today, so this is happening more and more. The worrying thing is that this data is really not that safe, whether it is exposed by hacks or willingly sold for profit by one of the parties.

In October 2023, Rite Aid filed for Chapter 11 bankruptcy thanks to a large debt load, slumping sales, and thousands of lawsuits alleging involvement in the opioid crisis. I like to think that ethical companies do survive in the longer term.

See https://www.theregister.com/2023/12/20/rite_aid_facial_recognition/